Forcing (mathematics)

In the mathematical discipline of set theory, forcing is a technique invented by Paul Cohen for proving consistency and independence results. It was first used, in 1963, to prove the independence of the axiom of choice and the continuum hypothesis from Zermelo–Fraenkel set theory. Forcing was considerably reworked and simplified in the 1960s, and has proven to be an extremely powerful technique both within set theory and in areas of mathematical logic such as recursion theory.

Descriptive set theory uses both the notion of forcing from recursion theory and set-theoretic forcing. Forcing has also been used in model theory, but it is common in model theory to define genericity directly without mention of forcing.

Intuitions

Forcing is equivalent to the method of Boolean-valued models, which some feel is conceptually more natural and intuitive, but usually much more difficult to apply.

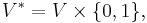

Intuitively, forcing consists of expanding the set theoretical universe V to a larger universe V*. In this bigger universe, for example, one might have lots of new subsets of ω = {0,1,2,…} that were not there in the old universe, and thereby violate the continuum hypothesis. While impossible on the face of it, this is just another version of Cantor's paradox about infinity. In principle, one could consider V* = V × {0,1}, identify x ∈ V with (x,0), and then introduce an expanded membership relation involving the "new" sets of the form (x,1). Forcing is a more elaborate version of this idea, reducing the expansion to the existence of one new set, and allowing for fine control over the properties of the expanded universe.

Cohen's original technique, now called ramified forcing, is slightly different from the unramified forcing expounded here.

Forcing posets

A forcing poset is an ordered triple

- (P, ≤, 1)

where "≤" is a preorder on P that satisfies the following splitting condition

- For all p ∈ P, there are q, r ∈ P such that q, r ≤ p with no s ∈ P such that s ≤ q, r

and 1 is a largest element, that is,

- p ≤ 1 for all p ∈ P.

Members of P are called conditions. One reads

- p ≤ q

as

- p is stronger than q.

Intuitively, the "smaller" condition provides "more" information, just as the smaller interval [3.1415926,3.1415927] provides more information about the number π than the interval [3.1,3.2] does.

(There are various conventions here. Some authors require "≤" to also be antisymmetric, so that the relation is a partial order. Some use the term partial order anyway, conflicting with standard terminology, while some use the term preorder. The largest element can be dispensed with. The reverse ordering is also used, most notably by Saharon Shelah and his co-authors.)
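The ordering convention can be illustrated with a small sketch. It assumes, purely for illustration, that conditions are closed intervals with rational endpoints ordered by inclusion; the function names `stronger`, `compatible` and `split` are hypothetical and do not come from the text.

```python
from fractions import Fraction

# Assumption: a condition is a pair (lo, hi) of rationals with lo < hi,
# standing for the closed interval [lo, hi].

def stronger(p, q):
    """Return True if p <= q, i.e. p is a subinterval of q (more information)."""
    return q[0] <= p[0] and p[1] <= q[1]

def compatible(p, q):
    """Two intervals are compatible if some interval of positive length lies in both."""
    return max(p[0], q[0]) < min(p[1], q[1])

def split(p):
    """Return two stronger conditions with no common extension (outer thirds of p)."""
    lo, hi = p
    third = (hi - lo) / 3
    return (lo, lo + third), (hi - third, hi)

coarse = (Fraction(31, 10), Fraction(32, 10))                   # [3.1, 3.2]
fine = (Fraction(31415926, 10**7), Fraction(31415927, 10**7))   # [3.1415926, 3.1415927]
left, right = split(coarse)
```

Here `stronger(fine, coarse)` holds, matching the reading "p is stronger than q" for p ≤ q, and `split` exhibits the two incompatible extensions required by the splitting condition.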

Associated with a forcing poset P are the P-names. P-names are sets of the form

- {(u,p):u is a P-name and p ∈ P and (some criterion involving u and p)}.

This definition is circular, which in set theory means it is really a definition by transfinite recursion. In long form, one defines

- Name(0) = {};

- Name(α + 1) = a well-defined subset of the power set of (Name(α) × P);

- Name(λ) = ∪{Name(α) : α < λ}, for λ a limit ordinal,

and then defines the class of P-names to be

- V(P) = ∪{Name(α) : α is an ordinal}.

The P-names are, in fact, an expansion of the universe. Given x in V, one defines

- xˇ

to be the P-name

- {(yˇ,1) : y ∈ x}.

Again, this is really a definition by transfinite recursion.

Given any subset G of P, one next defines the interpretation or valuation map from names by

- val(u, G) = {val(v, G) : ∃ p ∈ G , (v, p) ∈ u}.

(Again a definition by transfinite recursion.) Note that if 1 is in G, then

- val(xˇ, G) = x.

One defines the name

- Ġ = {(pˇ, p) : p ∈ P},

then

- val(Ġ, G) = G.
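The recursive definitions of xˇ and val(u, G) can be sketched for hereditarily finite sets. This is only an illustration under stated assumptions: names are modelled as Python frozensets of (name, condition) pairs, conditions are plain strings with "1" as the largest element, and the function names `check` and `val` are hypothetical.

```python
def check(x, one="1"):
    """Canonical name for x: {(check(y), 1) : y in x}, for a hereditarily finite frozenset x."""
    return frozenset((check(y, one), one) for y in x)

def val(u, G):
    """val(u, G) = {val(v, G) : (v, p) in u for some p in G}."""
    return frozenset(val(v, G) for (v, p) in u if p in G)

# Three small ground-model sets: 0 = {}, 1 = {0}, 2 = {0, 1}.
empty = frozenset()
one_set = frozenset({empty})
two = frozenset({empty, one_set})

# A name whose value depends on which of the conditions "p", "q" lies in G.
u = frozenset({(check(empty), "p"), (check(one_set), "q")})

G1 = {"1", "p"}
G2 = {"1", "q"}
```

With these definitions `val(check(two), G1)` returns `two`, since "1" is in `G1`, while `val(u, G1)` and `val(u, G2)` differ: the interpretation of a name depends on G.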

A good example of a forcing poset is

- (Bor(I) , ⊆ , I ),

where I = [0,1] and Bor(I) is the collection of Borel subsets of I having non-zero Lebesgue measure. In this case, one can talk about the conditions as being probabilities, and a Bor(I)-name assigns membership in a probabilistic sense. Because of the ready intuition this example can provide, probabilistic language is sometimes used with other forcing posets.

Countable transitive models and generic filters

The key step in forcing is, given a ZFC universe V, to find appropriate G not in V. The resulting class of all interpretations of P-names will turn out to be a model of ZFC, properly extending the original V (since G∉V).

Instead of working with V, one considers a countable transitive model M with (P,≤,1) ∈ M. By model, we mean a model of set theory, either of all of ZFC, or a model of a large but finite subset of the ZFC axioms, or some variant thereof. Transitivity means that if x ∈ y ∈ M, then x ∈ M. The Mostowski collapsing theorem says this can be assumed if the membership relation is well-founded. The effect of transitivity is that membership and other elementary notions can be handled intuitively. Countability of the model relies on the Löwenheim–Skolem theorem.

Since M is a set, there are sets not in M – this follows from Russell's paradox. The appropriate set G to pick, and adjoin to M, is a generic filter on P. The filter condition means that G⊆P and

- 1 ∈ G ;

- if p ≥ q ∈ G, then p ∈ G ;

- if p,q ∈ G, then ∃r ∈ G, r ≤ p and r ≤ q.

For G to be generic means

- if D ∈ M is a dense subset of P (that is, p ∈ P implies ∃q ∈ D, q ≤ p) then G∩D ≠ 0 .

The existence of a generic filter G follows from the Rasiowa–Sikorski lemma. In fact, slightly more is true: given a condition p ∈ P, one can find a generic filter G such that p ∈ G. Due to the splitting condition, if G is a filter, then P\G is dense. If G is in M, then P\G is in M because M is a model of set theory, and genericity would then require G to meet P\G, which is impossible. For this reason, a generic filter is never in M.
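The idea behind the Rasiowa–Sikorski construction, extending a condition step by step into each dense set in turn, can be sketched as follows. The sketch makes several assumptions that are not in the text: conditions are finite partial functions from natural numbers to {0,1} stored as Python dicts, each dense set is represented by a hypothetical "extender" function that maps any condition to a stronger one inside that dense set, and only finitely many dense sets are met, so the result is not generic in the real sense.

```python
def descending_chain(p0, extenders):
    """Build p0 >= p1 >= p2 >= ..., where p_{i+1} lies in the i-th dense set."""
    chain = [p0]
    for extend in extenders:
        chain.append(extend(chain[-1]))
    return chain

def define_at(n):
    """Extender for the dense set D_n = {p : n in dom(p)}."""
    def extend(p):
        q = dict(p)
        q.setdefault(n, 0)  # arbitrary value when n is not yet decided
        return q
    return extend

def in_generated_filter(r, chain):
    """r is in the filter generated by the chain if some chain element extends r."""
    return any(all(k in p and p[k] == v for k, v in r.items()) for p in chain)

# Start from the condition {5: 1} and meet D_0, ..., D_3.
chain = descending_chain({5: 1}, [define_at(n) for n in range(4)])
```

The filter is the upward closure of the chain, which is why the starting condition `{5: 1}` belongs to it; this mirrors the remark that a generic filter can be chosen to contain any given condition.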

Forcing

Given a generic filter G⊆P, one proceeds as follows. The subclass of P-names in M is denoted M(P). Let M[G]={val(u,G):u∈M(P)}. To reduce the study of the set theory of M[G] to that of M, one works with the forcing language, which is built up like ordinary first-order logic, with membership as a binary relation and all the names as constants.

Define p ⊩_{M,P} φ(u1,…,un) (read "p forces φ in model M with poset P"), where p is a condition, φ is a formula in the forcing language, and the ui are names, to mean that if G is a generic filter containing p, then M[G] ⊨ φ(val(u1,G),…,val(un,G)). The special case 1 ⊩_{M,P} φ is often written P ⊩_{M,P} φ or ⊩_{M,P} φ. Such statements are true in M[G] no matter what G is.

What is important is that this "external" definition of the forcing relation p ⊩_{M,P} φ is equivalent to an "internal" definition, defined by transfinite induction over the names on instances of u ∈ v and u = v, and then by ordinary induction over the complexity of formulas. This has the effect that all the properties of M[G] are really properties of M, and the verification of ZFC in M[G] becomes straightforward. This is usually summarized as three key properties:

- Truth: M[G] ⊨ φ(val(u1,G),…,val(un,G)) if and only if it is forced by G, that is, for some condition p ∈ G, p ⊩_{M,P} φ(u1,…,un).

- Definability: The statement "p ⊩_{M,P} φ(u1,…,un)" is definable in M.

- Coherence: If p ⊩_{M,P} φ(u1,…,un) and q ≤ p, then q ⊩_{M,P} φ(u1,…,un).

We define the forcing relation in V by induction on complexity, in which we simultaneously define forcing of atomic formulas by ∈-induction.

1. p ⊩_P a ∈ b if for any q ≤ p there is r ≤ q such that there is (c, s) ∈ b such that r ≤ s and r ⊩_P a = c.

2. p ⊩_P a = b if p ⊩_P a ⊆ b and p ⊩_P b ⊆ a

- where

- p ⊩_P a ⊆ b if for all q ≤ p and for all (c, r) ∈ a, if q ≤ r then q ⊩_P c ∈ b.

3. p ⊩_P ¬ f if there is no q ≤ p such that q ⊩_P f.

4. p ⊩_P f ∧ g if p ⊩_P f and p ⊩_P g.

5. p ⊩_P ∀ x f if p ⊩_P f(a) for any name a, where f(a) is the result of replacing all free occurrences of x in f by a.

In 1–5 p is an arbitrary condition. In 1 and 2 a and b are arbitrary names and in 3–5 f and g are arbitrary formulas. This definition provides the possibility of working in V without any countable transitive model M. The following statement gives the announced definability:

p ⊩_{M,P} f if and only if M ⊨ p ⊩_P f.

(Where no confusion is possible we simply write ⊩.)
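As a worked illustration of how the clauses combine, one can unfold the forcing of a disjunction. This assumes, as is common but not stated in the text, that f ∨ g is treated as an abbreviation for ¬(¬f ∧ ¬g); only clauses 3 and 4 of the definition are used.

```latex
\begin{align*}
p \Vdash f \lor g
  &\iff p \Vdash \neg(\neg f \land \neg g) \\
  &\iff \neg\exists q \le p\; \bigl(q \Vdash \neg f \ \text{and}\ q \Vdash \neg g\bigr) \\
  &\iff \forall q \le p\; \exists r \le q\; \bigl(r \Vdash f \ \text{or}\ r \Vdash g\bigr).
\end{align*}
```

The last step uses clause 3 twice: q fails to force ¬f exactly when some r ≤ q forces f, and similarly for g. In particular, a condition can force f ∨ g without forcing either disjunct.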

Consistency

The above can be summarized by saying the fundamental consistency result is that given a forcing poset P, we may assume that there exists a generic filter G, not in the universe V, such that V[G] is again a set theoretic universe, modelling ZFC. Furthermore, all truths in V[G] can be reduced to truths in V regarding the forcing relation.

Both styles, adjoining G to a countable transitive model M or to the whole universe V, are commonly used. Less commonly seen is the approach using the "internal" definition of forcing, in which no mention of set or class models is made. This was Cohen's original method, and in one elaboration, it becomes the method of Boolean-valued analysis.

Cohen forcing

The simplest nontrivial forcing poset is ( Fin(ω,2) , ⊇ , 0 ), the finite partial functions from ω to 2={0,1} under reverse inclusion. That is, a condition p is essentially two disjoint finite subsets p⁻¹[1] and p⁻¹[0] of ω, to be thought of as the "yes" and "no" parts of p, with no information provided on values outside the domain of p. Saying that q is stronger than p means that q ⊇ p, in other words, the "yes" and "no" parts of q are supersets of the "yes" and "no" parts of p, and in that sense, provide more information.

Let G be a generic filter for this poset. If p and q are both in G, then p∪q is a condition, because G is a filter. This means that g=⋃G is a well-defined partial function from ω to 2, because any two conditions in G agree on their common domain.

g is in fact a total function. Given n ∈ ω, let Dₙ = { p : p(n) is defined }; then Dₙ is dense. (Given any p, if n is not in p’s domain, adjoin a value for n; the result is in Dₙ.) A condition p ∈ G∩Dₙ has n in its domain, and since p ⊆ g, g(n) is defined.

Let X = g⁻¹[1], the set of all "yes" members of the generic conditions. It is possible to give a name for X directly. Let Ẋ = { ( nˇ , p ) : p(n)=1 }; then val( Ẋ , G ) = X. Now suppose A⊆ω in V. We claim that X≠A. Let D_A = { p : ∃n, n∈dom(p) and p(n)=1 if and only if n∉A }. D_A is dense. (Given any p, if n is not in p’s domain, adjoin a value for n contrary to the status of "n∈A".) Then any p∈G∩D_A witnesses X≠A. To summarize, X is a new subset of ω, necessarily infinite.
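The density argument for the set of conditions that disagree with a given A can be sketched as a one-step extension. Assumptions for this illustration: a condition is a Python dict from natural numbers to 0 or 1, the ground-model set A is given by a membership predicate `in_A`, and the helper names `extend_against` and `disagrees` are hypothetical.

```python
def extend_against(p, in_A):
    """Return q extending p that decides some new n contrary to whether n is in A."""
    n = 0
    while n in p:          # p is finite, so an undecided n always exists
        n += 1
    q = dict(p)
    q[n] = 0 if in_A(n) else 1
    return q

def disagrees(p, in_A):
    """True if p puts some n in the 'yes' part exactly when n is not in A."""
    return any(v != int(in_A(n)) for n, v in p.items())

evens = lambda n: n % 2 == 0
p = {0: 1, 1: 0}                 # so far p agrees with the set of even numbers
q = extend_against(p, evens)     # q decides 2 the "wrong" way
```

Since every condition can be extended this way, any generic filter contains a condition like `q`, which is what rules out X = A for each A in V.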

Replacing ω with ω×ω₂, that is, considering instead finite partial functions whose inputs are of the form (n,α), with n<ω and α<ω₂, and whose outputs are 0 or 1, one gets ω₂ new subsets of ω. They are all distinct, by a density argument: given α<β<ω₂, let D_{α,β} = {p : ∃n, p(n,α)≠p(n,β)}; then each D_{α,β} is dense, and a generic condition in it proves that the αth new set disagrees somewhere with the βth new set.

This is not yet the falsification of the continuum hypothesis. One must prove that no new maps have been introduced which map ω onto ω₁ or ω₁ onto ω₂. For example, if one considers instead Fin(ω,ω₁), finite partial functions from ω to ω₁, the first uncountable ordinal, one gets in V[G] a bijection from ω to ω₁. In other words, ω₁ has collapsed, and in the forcing extension, is a countable ordinal.

The last step in showing the independence of the continuum hypothesis, then, is to show that Cohen forcing does not collapse cardinals. For this, a sufficient combinatorial property is that all of the antichains of this poset are countable.

The countable chain condition

An antichain A of P is a subset such that if p and q are in A, then p and q are incompatible (written p ⊥ q), meaning there is no r in P such that r ≤ p and r ≤ q. In the Borel sets example, incompatibility means p∩q has measure zero. In the finite partial functions example, incompatibility means that p∪q is not a function, in other words, p and q assign different values to some domain input.
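For the finite partial functions example, compatibility and the antichain property are easy to test mechanically. The following is a minimal sketch, assuming conditions are stored as Python dicts; the function names are illustrative only.

```python
from itertools import combinations

def compatible(p, q):
    """p and q are compatible iff they agree on their common domain (p ∪ q is a function)."""
    return all(q[n] == v for n, v in p.items() if n in q)

def is_antichain(conditions):
    """True if the given conditions are pairwise incompatible."""
    return all(not compatible(p, q) for p, q in combinations(conditions, 2))

family = [{0: 0, 1: 0}, {0: 0, 1: 1}, {0: 1}]
```

Here `family` is an antichain because each pair assigns different values to some common input, whereas `{0: 1}` and `{1: 0}` have disjoint domains and are therefore compatible.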

P satisfies the countable chain condition (c.c.c.) if every antichain in P is countable. (The name, which is obviously inappropriate, is a holdover from older terminology. Some mathematicians write "c.a.c." for "countable antichain condition".)

It is easy to see that Bor(I) satisfies the c.c.c., because the measures add up to at most 1. Fin(E,2) is also c.c.c., but the proof is more difficult.
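The measure argument for Bor(I) can be spelled out as follows; the notation μ for Lebesgue measure and Aₙ for the pieces of an antichain A is introduced here only for illustration.

```latex
\begin{align*}
A_n &= \{\, p \in A : \mu(p) > 1/n \,\}, \qquad A = \bigcup_{n \ge 1} A_n, \\
\frac{|F|}{n} &< \sum_{p \in F} \mu(p) = \mu\Bigl(\bigcup F\Bigr) \le 1
  \quad\text{for every nonempty finite } F \subseteq A_n .
\end{align*}
```

The middle equality holds because distinct members of an antichain intersect in a set of measure zero. Hence each Aₙ has fewer than n elements, and A is a countable union of finite sets.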

Given an uncountable subfamily W ⊆ Fin(E,2), shrink W to an uncountable subfamily W₀ of sets of size n, for some n<ω. If p(e₁)=b₁ for uncountably many p ∈ W₀, shrink to this uncountable subfamily W₁, and repeat, getting a finite set { (e₁,b₁) , … , (eₖ,bₖ) }, and an uncountable family Wₖ of incompatible conditions of size n−k such that every e is in at most countably many dom(p) for p ∈ Wₖ. Now pick an arbitrary p ∈ Wₖ, and pick from Wₖ any q that is not one of the countably many members that have a domain member in common with p. Then p ∪ { (e₁,b₁) , … , (eₖ,bₖ) } and q ∪ { (e₁,b₁) , … , (eₖ,bₖ) } are compatible, so W is not an antichain. In other words, Fin(E,2) antichains are countable.

The importance of antichains in forcing is that for most purposes, dense sets and maximal antichains are equivalent. A maximal antichain A is one that cannot be extended and still be an antichain. This means every element p ∈ P is compatible with some member of A. Their existence follows from Zorn's lemma. Given a maximal antichain A, let D = { p : p≤q, some q∈A }. D is dense, and G∩D≠0 if and only if G∩A≠0. Conversely, given a dense set D, Zorn's lemma shows there exists a maximal antichain A⊆D, and then G∩D≠0 if and only if G∩A≠0.

Assume P is c.c.c. Given x,y ∈ V, with f:x→y in V[G], one can approximate f inside V as follows. Let u be a name for f (by the definition of V[G]) and let p be a condition which forces u to be a function from x to y. Define a function F whose domain is x by F(a) = { b : ∃ q ≤ p, q forces u(aˇ) = bˇ }. By definability of forcing, this definition makes sense within V. By coherence of forcing, different b’s come from incompatible q’s. By c.c.c., F(a) is countable.

In summary, f is unknown in V, since it depends on G, but it is not wildly unknown for a c.c.c. forcing. One can identify a countable set of guesses for what the value of f is at any input, independent of G.

This has the following very important consequence. If in V[G], f:α→β is a surjection from one infinite ordinal to another, then there is a surjection g:ω×α→β in V and consequently a surjection h:α→β in V. In particular, cardinals cannot collapse. The conclusion is that 2^ℵ₀ ≥ ℵ₂ in V[G].

Easton forcing

The exact value of the continuum in the above Cohen model, and variants like Fin(ω × κ , 2) for cardinals κ in general, was worked out by Robert M. Solovay, who also worked out how to violate GCH (the generalized continuum hypothesis), for regular cardinals only, a finite number of times. For example, in the above Cohen model, if CH holds in V, then 2^ℵ₀ = ℵ₂ holds in V[G].

W. B. Easton worked out the infinite and proper class version of violating the GCH for regular cardinals, basically showing the known restrictions (monotonicity, Cantor's theorem, and König's theorem) were the only ZFC provable restrictions. See Easton's theorem.

Easton's work was notable in that it involved forcing with a proper class of conditions. In general, the method of forcing with a proper class of conditions will fail to give a model of ZFC. For example, Fin ( ω × On , 2 ), where "On" is the proper class of all ordinals, will make the continuum a proper class. Fin ( ω , On ) will introduce a countable enumeration of the ordinals. In both cases, the resulting V[G] is visibly not a model of ZFC.

At one time, it was thought that more sophisticated forcing would also allow arbitrary variation in the powers of singular cardinals. But this has turned out to be a difficult, subtle and even surprising problem, with several more restrictions provable in ZFC, and with the forcing models depending on the consistency of various large cardinal properties. Many open problems remain.

Random reals

In the Borel sets ( Bor(I) , ⊆ , I ) example, the generic filter converges to a real number r, called a random real. A name for the decimal expansion of r (in the sense of the canonical set of decimal intervals that converge to r) can be given by letting ṙ = { ( Eˇ , E ) : E = [ k⋅10⁻ⁿ , (k + 1)⋅10⁻ⁿ ], 0 ≤ k < 10ⁿ }. This is, in some sense, just a subname of the canonical name for G.
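The decimal intervals in this name can be made concrete with a small sketch. A genuinely random real lies outside V and cannot be computed, so the example below uses an ordinary rational number as a stand-in; the function name `decimal_intervals` is hypothetical.

```python
from fractions import Fraction
from math import floor

def decimal_intervals(r, depth):
    """For r in [0, 1), list the intervals [k*10^-n, (k+1)*10^-n], n = 1..depth, containing r."""
    out = []
    for n in range(1, depth + 1):
        k = floor(r * 10**n)   # index of the level-n decimal interval holding r
        out.append((Fraction(k, 10**n), Fraction(k + 1, 10**n)))
    return out

intervals = decimal_intervals(Fraction(1, 3), 3)
```

For the stand-in value 1/3 the intervals are [0.3, 0.4], [0.33, 0.34] and [0.333, 0.334]; each is contained in the previous one, which is the sense in which the conditions of the form E in the generic filter converge to r.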

To recover G from r, one takes those Borel subsets of I that "contain" r. Since the forcing poset is in V, but r is not in V, this containment is actually impossible. But there is a natural sense in which the interval [0.5, 0.6] in V "contains" a random real whose decimal expansion begins 0.5. This is formalized by the notion of "Borel code".

Every Borel set can, nonuniquely, be built up, starting from intervals with rational endpoints and applying the operations of complement and countable unions, a countable number of times. The record of such a construction is called a Borel code. Given a Borel set B in V, one recovers a Borel code, and then applies the same construction sequence in V[G], getting a Borel set B*. One can prove that one gets the same set independent of the construction of B, and that basic properties are preserved. For example, if B⊆C, then B*⊆C*. If B has measure zero, then B* has measure zero.

So given r, a random real, one can show that G = { B (in V) : r∈B* (in V[G]) }. Because of the mutual interdefinability between r and G, one generally writes V[r] for V[G].

A different interpretation of reals in V[G] was provided by Dana Scott. Rational numbers in V[G] have names that correspond to countably many distinct rational values assigned to a maximal antichain of Borel sets, in other words, a certain rational-valued function on I = [0,1]. Real numbers in V[G] then correspond to Dedekind cuts of such functions, that is, measurable functions.

Boolean-valued models

- Main article: Boolean-valued model

Perhaps more clearly, the method can be explained in terms of Boolean-valued models. In these, any statement is assigned a truth value from some complete atomless Boolean algebra, rather than just a true/false value. Then an ultrafilter is picked in this Boolean algebra, which assigns values true/false to statements of our theory. The point is that the resulting theory has a model which contains this ultrafilter, which can be understood as a new model obtained by extending the old one with this ultrafilter. By picking a Boolean-valued model in an appropriate way, we can get a model that has the desired property. In it, only statements which must be true (are "forced" to be true) will be true, in a sense (since it has this extension/minimality property).

Meta-mathematical explanation

In forcing we usually seek to show some sentence is consistent with ZFC (or optionally some extension of ZFC). One way to interpret the argument is that we assume ZFC is consistent and use it to prove ZFC combined with our new sentence is also consistent.

Each "condition" is a finite piece of information – the idea is that only finite pieces are relevant for consistency, since by the compactness theorem a theory is satisfiable if and only if every finite subset of its axioms is satisfiable. Then, we can pick an infinite set of consistent conditions to extend our model. Thus, assuming consistency of set theory, we prove consistency of the theory extended with this infinite set.

Logical explanation

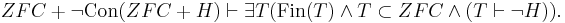

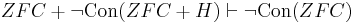

By Gödel's incompleteness theorem one cannot prove the consistency of ZFC using only the axioms of ZFC, and consequently one cannot prove the consistency of ZFC+H for any hypothesis H using only ZFC+H. For this reason the aim of a consistency proof is to prove the consistency of ZFC + H relative to the consistency of ZFC. Such problems are known as problems of relative consistency. In fact one proves

(*) ZFC ⊢ Con(ZFC) → Con(ZFC + H)

We will give the general schema of relative consistency proofs. Because any proof is finite, it uses only a finite number of axioms.

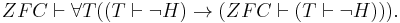

For any given proof, ZFC can verify the validity of this proof. This is provable by induction on the length of the proof.

Now we obtain

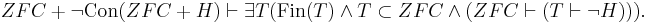

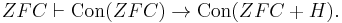

If we prove the following

(**) ZFC ⊢ Con(T + H) for every finite set T of ZFC axioms

we can conclude that

which is equivalent to

which gives (*). The core of a relative consistency proof is proving (**). One has to construct a ZFC proof of Con(T + H) for any given finite set T of ZFC axioms (using the methods of ZFC, of course). (There is, of course, no universal proof of Con(T + H).)

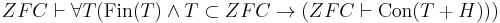

It is provable in ZFC that for any condition p, the set of formulas (evaluated by names) forced by p is deductively closed. Also, for any ZFC axiom, ZFC proves that this axiom is forced by 1. It then suffices to prove that there is at least one condition which forces H.

In the case of Boolean-valued forcing, the procedure is similar: one has to prove that the Boolean value of H is not 0.

Another approach uses the reflection theorem. For any given finite set of ZFC axioms, there is a ZFC proof that this set of axioms has a countable transitive model. For any given finite set T of ZFC axioms, there is a finite set T' of ZFC axioms such that ZFC proves that if a countable transitive model M satisfies T', then M[G] satisfies T. One has to prove that there is a finite set T" of ZFC axioms such that if a countable transitive model M satisfies T", then M[G] satisfies the hypothesis H under consideration. Then, for any given finite set T of ZFC axioms, ZFC proves Con(T + H).

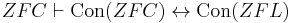

Sometimes in (**) some theory S stronger than ZFC is used for proving Con(T + H). Then we have a proof of the consistency of ZFC + H relative to the consistency of S. Note that ZFC ⊢ Con(ZFC) ↔ Con(ZFL), where ZFL is ZF + V = L (axiom of constructibility).

External links

- Tim Chow's article A Beginner's Guide to Forcing is a good introduction to the concepts of forcing that avoids a lot of technical detail. This paper grew out of Chow's newsgroup article Forcing for dummies. In addition to improved exposition, the Beginner's Guide includes a section on Boolean Valued Models.

- See also Kenny Easwaran's article A Cheerful Introduction to Forcing and the Continuum Hypothesis, which is also aimed at the beginner but includes more technical details than Chow's article.

- The Independence of the Continuum Hypothesis Paul J. Cohen, Proceedings of the National Academy of Sciences of the United States of America, Vol. 50, No. 6. (Dec. 15, 1963), pp. 1143–1148.

- The Independence of the Continuum Hypothesis, II Paul J. Cohen Proceedings of the National Academy of Sciences of the United States of America, Vol. 51, No. 1. (Jan. 15, 1964), pp. 105–110.